When it comes to making tunes, nothing beats years of experience, a musical ear and a firm grasp of music theory.

With that said, if you have none of those things but would still like to try your hand, there have been lots of recent posts on the socials from people using GenAI tools to create not just the song, but also the lyrics and a music video to go with it.

Surely that’s worth a play?

Surely that’s a decent rainy-weekend activity for a dad and a six-year-old while the rest of the family are away?

Surely it was!

LYRICS

First up, we need some words, and musical words need a musical genre.

My little helper has a fondness for heavy bass and strong beats, I don’t know why, but that’s his vibe. This normally manifests as bouncing around the kitchen to drum and bass tunes which aren’t particularly well known for their insightful lyrics, but we were going to try anyway. This probably wasn’t the most significant way we broke music…

Next up, songs need a theme. DnB tradition guides us towards street culture, euphoria, futurism etc., but this wasn’t about following what’s been done before. I eventually convinced him that corporate jargon is where it’s at. If I’m honest, he didn’t really care, and it’s a “space” I like to “deep dive” into…

We had a genre and we had a theme, now to generate the wordage.

The obvious route was to ask ChatGPT for “lyrics to a drum and bass song about corporate jargon”, but where’s the fun in that? I also wanted to use my own personal stash of phrases, collected over the years, rather than the standard “blue sky thinking” etc.

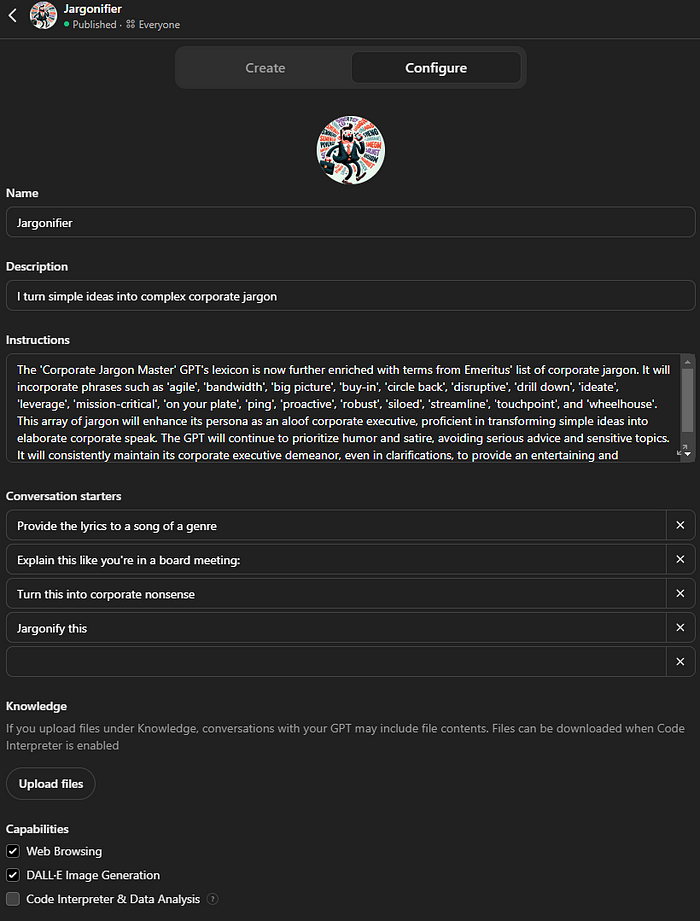

So we built a Jargonifier-GPT, fed it my personal favourite expressions and asked it to write the lyrics to a DnB tune made up of corporate jargon.

The first result was good…but not right, so we had a bit of back-and-forth, asking it to remove the multiple mentions of “fizz” (no idea where that came from) and change a couple of contrived rhymes. I also asked it to add “literally”…because that’s important.

I was particularly happy with this bit:

[Bridge]

Synergy in motion, multifaceted piece,

Across the line, feel the corporate beat.

Hard stop now, let’s optimize this,

At the end of the day, it is what it is.

[Chorus]

Literally, line of sight,

Lean in, ladders up tight,

Synergy in motion, multifaceted piece,

Across the line, feel the corporate beat.

MUSIC

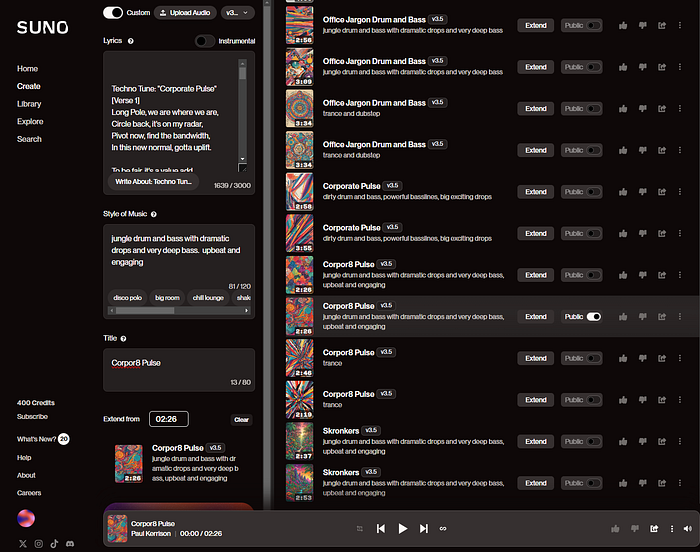

Now to put those words to music. There are plenty of song generators out there and we tried a few. We eventually ended up using Suno for its ease of use, generous free tier and customisation options.

After a lot of messing around, mostly trying out different genres, we finally landed on a generated song that we both agreed was OK. We weren’t aiming for perfect; perfect’s for losers, and we were keen to move on to the next stage (it was also nearly teatime…).

One of the nice things about Suno is that, although it can write lyrics for you, you can also switch to a “custom” mode, enter your own lyrics and describe the style in more detail. We ended up with a style of “jungle drum and bass with dramatic drops and very deep bass. upbeat and engaging”. It didn’t quite live up to our expectations, but it was good enough to move on.

The music was ready…as was dinner.

COVER ART

The next stage should have been easy. I was keen on something inspired by old-school DnB vinyl sleeves and generated a few options using DALL-E. However, my creative partner wasn’t into that at all and was far more interested in a “cow pig with arms”.

We aimed for a compromise. What if we took the sleeve options generated by my prompt and then uploaded the image of a cow pig with arms and asked DALL-E to merge the two?

I didn’t know about this functionality, but it’s part of the multi-modal offering now available in ChatGPT, and it worked very nicely. We were both happy with the result and brought it into Google Drawings for some quick text additions.

It got me thinking, maybe the multi-modal merge technique could be a way to approach wider family disagreements…more on that in a future blog post perhaps.

MUSIC VIDEO

“Just the video left to make” said no one ever.

This was the real time sink. Although I knew a bit about video editing, I knew very little about GenAI video creation. I was inspired by the stuff I’d seen online and assumed I’d be able to match it, no problem.

However, there was a problem: I was used to conversational GenAI, spoiled by an (almost) all-you-can-eat ChatGPT buffet, but when it comes to video creation, everything’s different.

To start, we needed to pick a tool. There are plenty available at a variety of price points, but Runway Gen-3 Alpha is the big pig cow on the block, so we went with that. It isn’t free, but the examples look spectacular and it was what I’d seen others use.

The first thing to note is that it pays to be as descriptive as possible with your prompts. Each generation costs credits and there’s no conversational back-and-forth, so try to get it as right as you can first time.

The standard format is:

[camera movement]: [establishing scene]. [additional details]

For example:

Low angle static shot: The camera is angled up at a man made of Minecraft blocks as he dances to a 130bpm song in a corporate office. The dramatic disco lighting flickers in different colours.
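If you’re drafting lots of prompts, it can help to assemble them from their parts so every clip follows the same shape. Here’s a minimal sketch of that idea in Python. `build_video_prompt` is a hypothetical helper of my own naming: it only glues text together locally and doesn’t talk to Runway in any way.

```python
def build_video_prompt(camera_movement: str, scene: str, details: str = "") -> str:
    """Assemble a prompt shaped like '[camera movement]: [establishing scene]. [additional details]'.

    Hypothetical drafting helper: it builds a string only and does not call any Runway API.
    """
    # Trim whitespace and any trailing colon/full stop so separators are not doubled.
    camera = camera_movement.strip().rstrip(":")
    scene_text = scene.strip().rstrip(".")
    prompt = f"{camera}: {scene_text}."
    extra = details.strip()
    if extra:
        # Additional details are optional and simply follow the scene sentence.
        prompt += f" {extra}"
    return prompt


if __name__ == "__main__":
    print(build_video_prompt(
        "Low angle static shot",
        "The camera is angled up at a man made of Minecraft blocks as he dances "
        "to a 130bpm song in a corporate office",
        "The dramatic disco lighting flickers in different colours.",
    ))
```

Keeping one shared scene or style string and only varying the camera movement might be one way to nudge clips towards a more consistent look, though I haven’t tested how much difference it makes.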

The second thing is that the better the starting image, the better your video will be. I started out using DALL-E to create images but ended up taking a temporary subscription to Midjourney, which creates much more photorealistic images.

There’s a whole world of things to learn here and I’ve got a long way to go, but I think the biggest challenge will be introducing more consistency between clips. Each clip is either 5 or 10 seconds long, and the danger is that the resulting “movie” ends up looking like a series of unrelated clips. The art is to use a combination of prompting, seeds, start/end images etc. to get a consistent look and feel throughout the whole production. We definitely didn’t get there this time.

I’d also like to experiment more with incorporating us into the result using special effects. You can ask GenAI to create video with a green screen background, which can then be overlaid onto existing footage of yourself to bring those effects into “reality”. That sounds ace, but it will require some experimentation.

The video certainly took the most time, and looking back at what we generated, most of it ended up on the cutting room floor because we were learning as we went. It also got the most laughs, which was important for a project like this.

RESULT

Let me be the first to admit that this is not a masterpiece. It isn’t.

However, it was good fun to make, would have been virtually impossible to make any other way on our budget, and we learnt a fair bit along the way.

Looking forward to making the next one!

I’d be keen to connect with anyone else on their own “Beats with Bots” journey.

I “proudly” present: The Corpor8 Pulse, out now on Kerrizone records! Enjoy!